In addition to measuring speed, Geekbench AI also attempts to measure accuracy, which is important for machine-learning workloads that rely on producing consistent outcomes (identifying and cataloging people and objects in a photo library, for example).

Credit: Andrew Cunningham

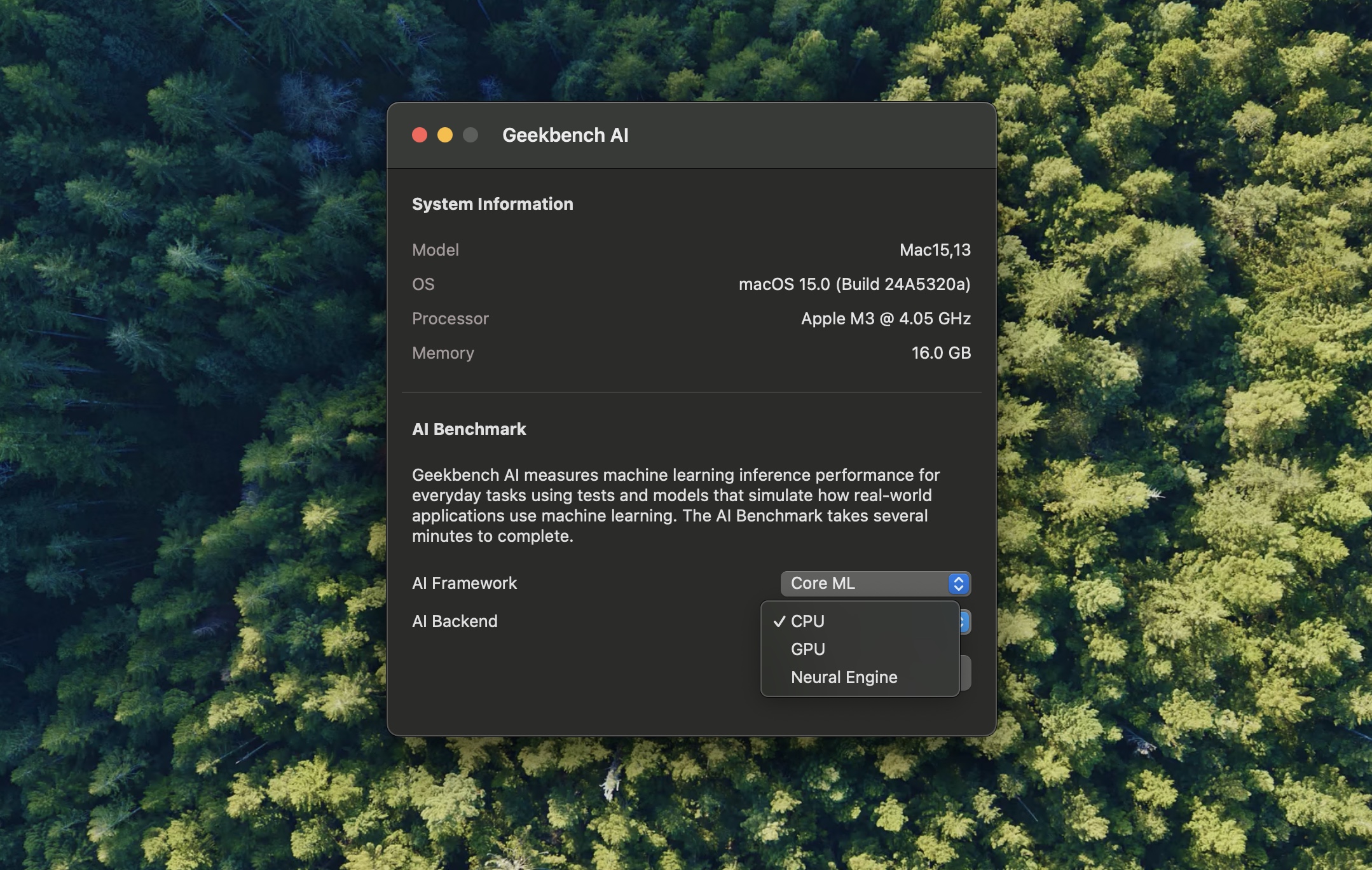

Geekbench AI can run AI workloads on your CPU, GPU, or NPU (if your system has a compatible NPU).

Credit: Andrew Cunningham

Geekbench AI supports several AI frameworks: OpenVINO for Windows and Linux, ONNX for Windows, Qualcomm’s QNN on Snapdragon-powered Arm PCs, Apple’s CoreML on macOS and iOS, and a number of vendor-specific frameworks on various Android devices. The app can run these workloads on the CPU, GPU, or NPU, at least when your device has a compatible NPU installed.

On Windows PCs, where NPU support and APIs like Microsoft’s DirectML are still works in progress, Geekbench AI supports Intel and Qualcomm’s NPUs but not AMD’s (yet).

“We’re hoping to add AMD NPU support in a future version once we have more clarity on how best to enable them from AMD,” Poole told Ars.

Geekbench AI is available for Windows, macOS, Linux, iOS/iPadOS, and Android. It’s free to use, though a Pro license gets you command-line tools, the ability to run the benchmark without uploading results to the Geekbench Browser, and a few other benefits. Though the app is hitting 1.0 today, the Primate Labs team expects to update the app frequently for new hardware, frameworks, and workloads as necessary.

“AI is nothing if not fast-changing,” Poole continued in the announcement post, “so anticipate new releases and updates as needs and AI features in the market change.”